The actor input sets publicSpreadsheet: true (switch it to false for authorized access), and the actor is then called with that input:

    const sheetRows = await Apify.call('lukaskrivka/google-sheets', sheetsActorInput)

In Web Scraper, you can use fetch instead; an example is shown in the readme. Similar functionality is provided by the Google Drive actor and many more.
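To make the call above more concrete, here is a minimal sketch of how the input object might be assembled. Only `publicSpreadsheet` and the actor name `lukaskrivka/google-sheets` come from the text; the `mode` and `spreadsheetId` fields, the helper names, and the injected `callActor` function are assumptions for illustration, so check the actor's readme for the real input schema.

```javascript
// Builds the input for the Google Sheets actor.
// ASSUMPTION: `mode` and `spreadsheetId` are illustrative field names;
// only `publicSpreadsheet` appears in the original article.
function buildSheetsActorInput(spreadsheetId, isPublic = true) {
    if (!spreadsheetId) {
        throw new Error('spreadsheetId is required');
    }
    return {
        mode: 'read', // assumed field
        spreadsheetId, // assumed field
        publicSpreadsheet: isPublic, // switch to false for authorized access
    };
}

// `callActor` is injected so the sketch runs without the Apify SDK.
// In actor code it could be: (id, input) => Apify.call(id, input)
async function loadSheetRows(callActor, spreadsheetId, isPublic = true) {
    const sheetsActorInput = buildSheetsActorInput(spreadsheetId, isPublic);
    return callActor('lukaskrivka/google-sheets', sheetsActorInput);
}
```

Passing the calling function in as a parameter keeps the input-building logic easy to test on its own, without starting a real actor run.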

If we want to load spreadsheet data from the code, we can do that by calling the Google Sheets actor. This actor allows us to use either public or authenticated (if we don't want to share our sheets) access. For more information, check the readme of the actor.

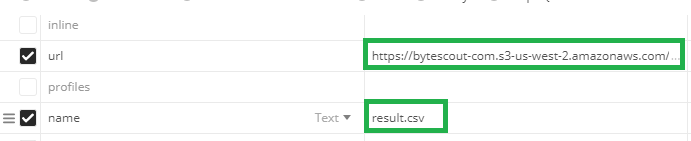

CSV URL extractor code

Let's say we want to fetch some CSV that we have on the web. The fetch function is accessible out of the box.

    // We fetch the CSV and convert the response to a string
    const csvString = await fetch(csvUrl).then((response) => response.text())
    // Now we can parse the rows by splitting on each newline character and trimming
    const rows = csvString.split('\n').map((row) => row.trim())
    // And we loop over the rows, check if each contains a valid URL and enqueue it
    await context.enqueueRequest()

Dynamically loading from Google Sheets, Google Drive and other services

You can also check out our public store for actors that help you integrate with various services. These actors handle a lot of complexity for you and give you a simple interface to use. Actors can be "called" from anywhere in the code to load data, export data or perform some action.
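The CSV steps described above (fetch, split into rows, validate, enqueue) can be sketched end to end as follows. This is an illustration rather than the article's exact code: the helper names, the `csvUrl` value, and the naive comma splitting (no support for quoted cells) are assumptions.

```javascript
// Returns true only for strings that parse as absolute http(s) URLs.
function isValidHttpUrl(value) {
    try {
        const { protocol } = new URL(value);
        return protocol === 'http:' || protocol === 'https:';
    } catch (err) {
        return false;
    }
}

// Splits CSV text into rows and cells and keeps the cells that are valid URLs.
// ASSUMPTION: cells contain no quoted commas, so a plain split(',') is enough.
function extractUrlsFromCsv(csvString) {
    const urls = [];
    for (const row of csvString.split('\n')) {
        for (const cell of row.split(',')) {
            const candidate = cell.trim(); // also strips a trailing '\r'
            if (isValidHttpUrl(candidate)) urls.push(candidate);
        }
    }
    return urls;
}

// Sketch of a page function that uses the helpers above.
async function pageFunction(context) {
    const csvUrl = 'https://example.com/urls.csv'; // hypothetical URL
    const csvString = await fetch(csvUrl).then((response) => response.text());
    for (const url of extractUrlsFromCsv(csvString)) {
        await context.enqueueRequest({ url });
    }
}
```

In Web Scraper, the page function runs in the browser context, so the two helpers would likely need to be defined inside the page function body rather than next to it.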

The Start URLs field of various scrapers has a neat option to upload an arbitrary file from your computer or link one from the web. The scraper will scan the provided resource and extract all URLs from it. That's cool: you don't even have to structure the file, because the URLs are automatically recognized with regular expressions.

There are many ways to fetch something from code. Web Scraper is specific in that it doesn't allow you to access any external library (except jQuery), and all the code executes in the browser context. To access an external resource, you have to use browser built-in functions like Fetch or jQuery's Ajax. Also, don't forget to switch on the Ignore CORS and CSP field in the Proxy and browser configuration tab. Otherwise, the browser may complain that you tried to access unauthorized external resources.
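To illustrate the idea of recognizing URLs in unstructured text with a regular expression, here is a minimal sketch. The pattern and the function name are assumptions made for this example; this is not the expression the Apify scrapers actually use.

```javascript
// Finds http(s) URLs anywhere in a block of text and returns them de-duplicated.
// ASSUMPTION: a URL ends at whitespace, a quote, an angle bracket or a comma.
function extractUrlsFromText(text) {
    const matches = text.match(/https?:\/\/[^\s"'<>,]+/g) || [];
    // Drop sentence punctuation that got glued to the end of a URL.
    const cleaned = matches.map((url) => url.replace(/[.)\];:!?]+$/, ''));
    return [...new Set(cleaned)];
}
```

A simple pattern like this works for plain lists and CSV exports, but it will miss or truncate unusual URLs, for example ones that contain commas.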

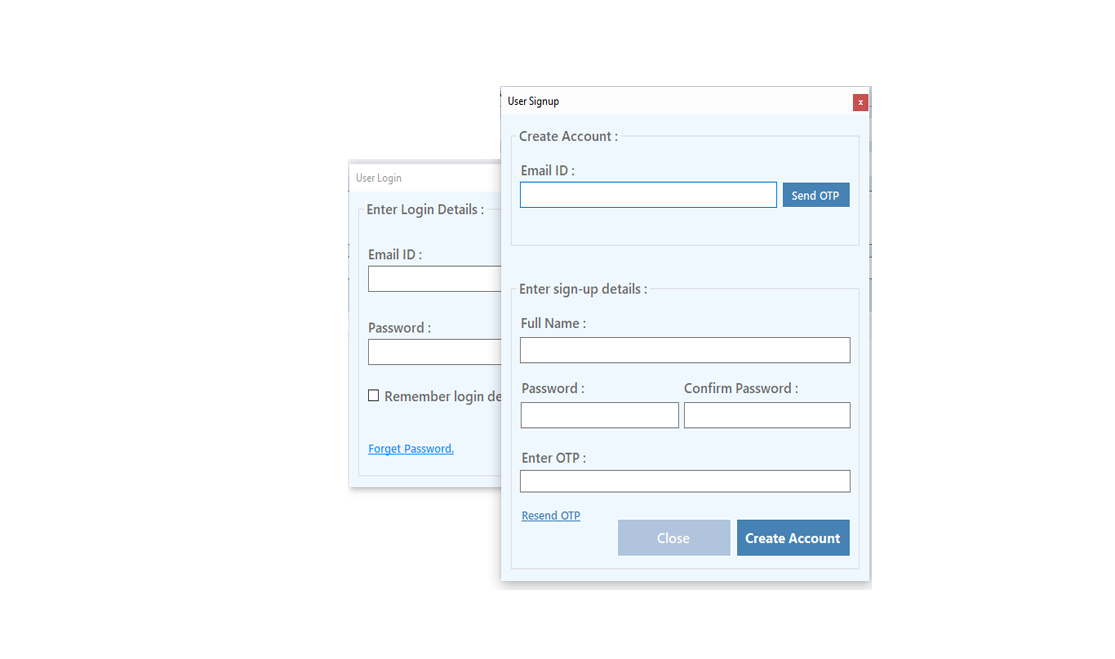

Note: If you just want to import a simple URL list from a spreadsheet, check this article.

Typically, a scraper on Apify uses one or more Start URLs to start the crawling process. You have several options for defining these URLs:

1. Define a static list of Start URLs in the basic settings on the scraper configuration page.
2. POST an array of Start URLs when starting the crawler via the API, and thus handle these settings dynamically from your application (guide for API integration here).
3. Fetch a list of URLs from an external source via the REST API from the Page function or actor source code.

We're going to take a look at the third option.

First, you have to prepare some external source of URLs. It can be a CSV file, database, application, cloud service, text file, etc. All it needs is a URL that can be used to fetch the data using a request from the Page function or actor code.
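For comparison, the second option in the list above (POSTing Start URLs through the API) might look roughly like the sketch below. It only builds the request and does not send it. The function name, the actor ID, the token, and the `baseInput` object are hypothetical, and the endpoint path and `startUrls` input shape are stated from memory of the Apify API, so verify them against the API reference before relying on them.

```javascript
// Builds (but does not send) a request that starts an actor run with dynamic Start URLs.
// ASSUMPTIONS: the endpoint path and the `startUrls: [{ url }]` input shape;
// `baseInput` stands in for the rest of the scraper input (page function, proxy, ...).
function buildRunRequest(actorId, token, urls, baseInput = {}) {
    const uniqueUrls = [...new Set(urls)];
    return {
        url: `https://api.apify.com/v2/acts/${encodeURIComponent(actorId)}/runs`,
        method: 'POST',
        headers: {
            'Content-Type': 'application/json',
            Authorization: `Bearer ${token}`,
        },
        body: JSON.stringify({
            ...baseInput,
            startUrls: uniqueUrls.map((url) => ({ url })),
        }),
    };
}

// Usage sketch (hypothetical actor ID and token):
// const { url, ...options } = buildRunRequest('apify~web-scraper', 'MY_TOKEN', ['https://example.com']);
// const response = await fetch(url, options);
```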
